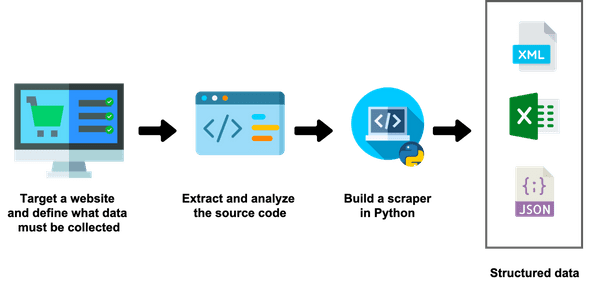

A lot of people at different levels of an organization may need to collect external data from the internet for various reasons: analyzing the competition, aggregating news feeds to follow trends in particular markets, or collecting daily stock prices to build predictive models…

Whether you’re a data scientist or a business analyst, you may find yourself in this situation from time to time and ask yourself the everlasting question: How can I possibly extract this website’s data to conduct market analysis?

One free way to extract a website’s data and structure it is scraping. In this post, you’ll learn what data scraping is and how to easily build your first scraper in Python.

1 - What is data scraping? 🧹

Let me spare you long definitions.

Broadly speaking, data scraping is the process of extracting a website’s data programmatically and structuring it according to one’s needs. Many companies are using data scraping to gather external data and support their business operations: this is currently a common practice in multiple fields.
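To make the definition concrete, here is a minimal sketch of "extract, then structure". It parses a small, made-up HTML fragment (the markup and class names are hypothetical) with BeautifulSoup, one of the libraries installed later in this post, using Python's built-in "html.parser" backend, and turns it into a list of dicts:

```python
from bs4 import BeautifulSoup

# A hypothetical page fragment: two articles, each with a title and a date
html = """
<div class="article"><h2>First post</h2><span class="date">2021-01-04</span></div>
<div class="article"><h2>Second post</h2><span class="date">2021-01-05</span></div>
"""

# "html.parser" ships with Python, so no extra parser is needed for this example
soup = BeautifulSoup(html, "html.parser")

# Turn each unstructured <div> into a structured record
records = [
    {
        "title": article.find("h2").get_text(strip=True),
        "date": article.find("span", class_="date").get_text(strip=True),
    }
    for article in soup.find_all("div", class_="article")
]

print(records)
# [{'title': 'First post', 'date': '2021-01-04'}, {'title': 'Second post', 'date': '2021-01-05'}]
```

A list of records like this maps directly onto rows of a pandas dataframe, which is how the scraper in this post ends up structuring its data.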

What do I need to know to learn data scraping in Python?

Not much. To build small scrapers, you only need to be somewhat familiar with Python and HTML syntax.

To build scalable and industrial scrapers, you’ll need to know one or two frameworks such as Scrapy or Selenium.

2 - Build your first scraper in Python

Set up your environment

Let’s learn how to turn a website into structured data! To do this, you’ll first need to install the following libraries:

- requests: to send HTTP requests such as GET and POST. We’ll mainly use it to fetch the source of a given web page.

- BeautifulSoup: to parse HTML and XML data very easily

- lxml: a fast HTML and XML parser that BeautifulSoup can use under the hood to speed up parsing

- pandas: to structure the data in dataframes and export it in the format of your choice (JSON, Excel, CSV, etc.)

If you’re using Anaconda, you should be good to go: all these packages are already installed. Otherwise, you should run the following commands:

```shell
pip install requests
pip install beautifulsoup4
pip install lxml
pip install pandas
pip install tqdm
```

To make it easier to follow along with my video tutorial, I also used a Jupyter notebook to make the process interactive.
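If you want to confirm the setup before going further, a minimal sketch using only the standard library's `importlib.metadata` (Python 3.8+) can report which packages are installed. The helper name `check_packages` is hypothetical, and tqdm is included because the notebook code uses it for progress bars:

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as used with pip (BeautifulSoup is published as "beautifulsoup4")
REQUIRED = ["requests", "beautifulsoup4", "lxml", "pandas", "tqdm"]


def check_packages(names):
    """Map each distribution name to its installed version, or None if it is missing."""
    status = {}
    for name in names:
        try:
            status[name] = version(name)
        except PackageNotFoundError:
            status[name] = None
    return status


for name, ver in check_packages(REQUIRED).items():
    print(f"{name:15} {ver or 'NOT INSTALLED'}")
```

Any line reading `NOT INSTALLED` points to the `pip install` command you still need to run.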

What website and data are we going to scrape?

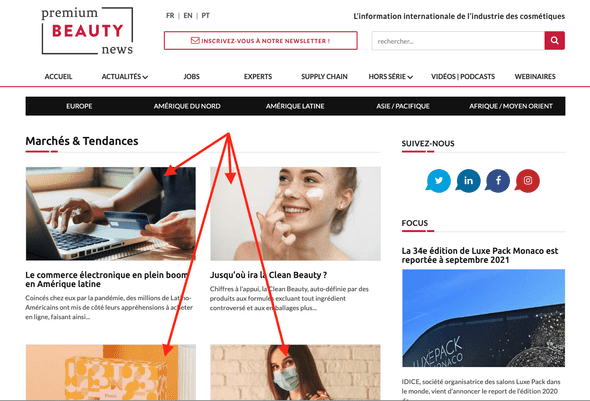

A friend of mine asked me to help him scrape this website, so I decided to turn it into a tutorial.

This website is called Premium Beauty News. It publishes recent trends in the beauty market. If you look at the front page, you’ll see that the articles we want to scrape are organized in a grid, spread over multiple pages.

Of course, we won’t only extract the headline of each article shown on these pages. We’ll open each post and grab everything we need: the title, the date, the abstract, and of course the remaining full content of the post.

Show me the code!

Because that’s why you’re here, right?

Basic imports first:

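```python
import requests
from bs4 import BeautifulSoup
import pandas as pd
# tqdm_notebook draws progress bars in Jupyter; recent tqdm releases deprecate it
# in favour of `from tqdm.notebook import tqdm`, but it still works (with a warning)
from tqdm import tqdm_notebook
```

I usually define a function that parses the content of a page given its URL. Since this function will be called many times, let’s call it parse_url: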

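```python
def parse_url(url):
    # Fetch the page; the timeout keeps the scraper from hanging on a slow server
    response = requests.get(url, timeout=30)
    # Fail loudly on HTTP errors (404, 500, ...) instead of parsing an error page
    response.raise_for_status()
    content = response.content
    # Parse the raw HTML with the fast lxml backend
    parsed_response = BeautifulSoup(content, "lxml")
    return parsed_response
```

Extracting each post’s data and metadata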

I’ll start by defining a function that extracts the data of each post (title, date, abstract, etc.) given its URL. We’ll then call this function inside a loop that goes over all the pages.

To build our scraper, we first have to understand the underlying HTML logic and structure of the page. Let’s start by extracting the title of the post.

By inspecting this element with Chrome’s inspector, we notice that the title appears inside an h1 tag of class “article-title”. Once the page has been parsed with BeautifulSoup, the title can be extracted with the find method.

```python
title = soup_post.find("h1", {"class": "article-title"}).text
```

Let’s now look at the date:

The date is stored in the datetime attribute of a span, which itself sits inside a header of class “row sub-header”. Translating this into code is quite easy with BeautifulSoup:

```python
datetime = soup_post.find("header", {"class": "row sub-header"}).find("span")["datetime"]
```

Regarding the abstract, it appears to be contained in an h2 tag of class “article-intro”.

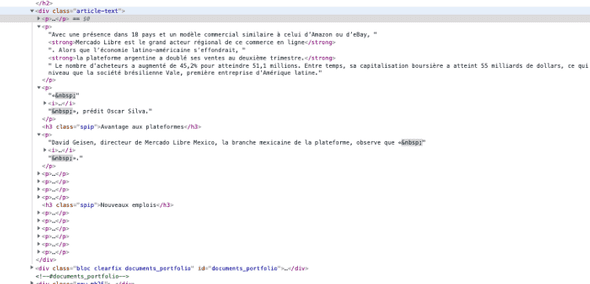

```python
abstract = soup_post.find("h2", {"class": "article-intro"}).text
```

Now, what about the full content of the post? It’s actually pretty easy to extract. The content is spread over multiple paragraphs (p tags) inside a div of class “article-text”.

Instead of going through each p tag, extracting its text and concatenating the results, BeautifulSoup can extract the full text in one go:

```python
content = soup_post.find("div", {"class": "article-text"}).text
```

Let’s package everything in a single function:

```python
def extract_post_data(post_url):
    # Download and parse the post page
    soup_post = parse_url(post_url)

    # Each field is located by the tag and class found with the inspector
    title = soup_post.find("h1", {"class": "article-title"}).text
    datetime = soup_post.find("header", {"class": "row sub-header"}).find("span")["datetime"]
    abstract = soup_post.find("h2", {"class": "article-intro"}).text
    content = soup_post.find("div", {"class": "article-text"}).text

    data = {
        "title": title,
        "datetime": datetime,
        "abstract": abstract,
        "content": content,
        "url": post_url,
    }
    return data
```

Extracting post URLs over multiple pages

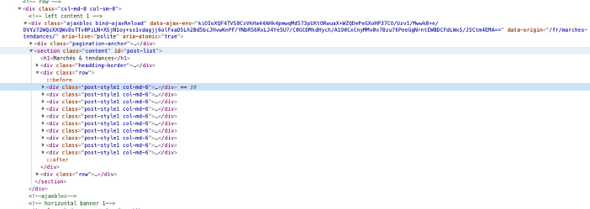

If we inspect the source of the home page, where articles are listed with their headlines, we’ll see that each of the ten posts in the grid sits inside a div of class “post-style1 col-md-6”, which is itself inside a section of class “content”.

Extracting posts per page is therefore quite easy:

```python
url = "https://www.premiumbeautynews.com/fr/marches-tendances/"

soup = parse_url(url)

# All posts of the grid live in the "content" section
section = soup.find("section", {"class": "content"})
posts = section.find_all("div", {"class": "post-style1 col-md-6"})
```

Then, for each individual post, we can extract the URL, which appears inside an a tag that is itself inside an h4. We’ll use this URL to call our previously defined function extract_post_data.

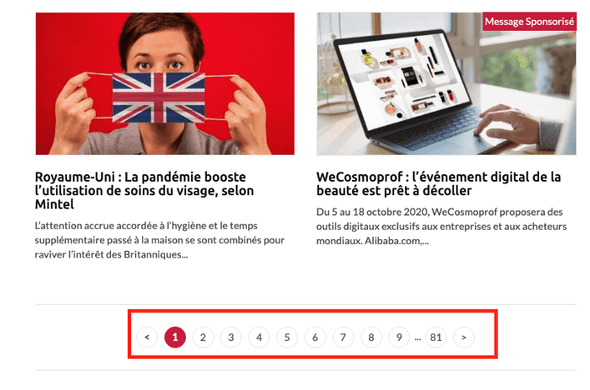

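```python
for post in posts:
    # The link to the full post is the <a> tag inside the card's h4 title
    uri = post.find("h4").find("a")["href"]
```

Paginating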

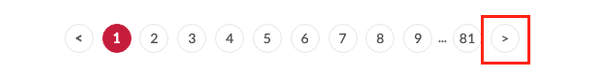

Once the posts of a given page are extracted, you may want to go to the next page and repeat the same operation.

If you look at the pagination, you’ll notice a “next” button.

This button becomes inactive once you reach the last page. Put differently, as long as the next button is active, the scraper should grab the posts of the current page, move to the next page and repeat the operation. When the button becomes inactive, the process should stop.
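This stopping rule can be tried without any network access. The sketch below replaces the website with a hypothetical in-memory dict, `PAGES`, where each page lists its post URLs and the link behind its “next” button (`None` on the last page); `PAGES` and `crawl` are illustrative names, not part of the original scraper:

```python
# Hypothetical stand-in for the site: page URL -> (post URLs on that page, next-page URL or None)
PAGES = {
    "/page-1": (["/post-a", "/post-b"], "/page-2"),
    "/page-2": (["/post-c"], "/page-3"),
    "/page-3": (["/post-d"], None),  # last page: the "next" button is inactive
}


def crawl(start_url, fetch):
    """Follow "next" links from start_url and collect every post URL until none is left."""
    collected = []
    url = start_url
    while url is not None:
        posts, next_url = fetch(url)  # the real scraper downloads and parses the page here
        collected.extend(posts)       # grab the posts of the current page
        url = next_url                # move on, or stop when there is no next page
    return collected


print(crawl("/page-1", lambda url: PAGES[url]))
# ['/post-a', '/post-b', '/post-c', '/post-d']
```

Checking for a next link at the end of each iteration, rather than hard-coding a page count, means the loop adapts on its own when the site adds or removes pages.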

Putting this logic together gives the following code:

next_button = ""

posts_data = []

count = 1

base_url = 'https://www.premiumbeautynews.com/'

while next_button is not None:

print(f"page number : {count}")

soup = parse_url(url)

section = soup.find("section", {"class": "content"})

posts = section.findAll("div", {"class": "post-style1 col-md-6"})

for post in tqdm_notebook(posts, leave=False):

uri = post.find("h4").find("a")["href"]

post_url = base_url + uri

data = extract_post_data(post_url)

posts_data.append(data)

next_button = soup.find("p", {"class": "pagination"}).find("span", {"class": "next"})

if next_button is not None:

url = base_url + next_button.find("a")["href"]

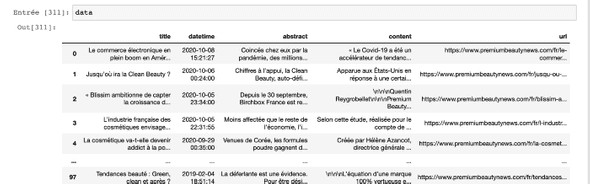

count += 1Once this loop completes, you’ll have all the data inside posts_data, which you can turn it into a beautiful dataframe and export to CSV or Excel file.

```python
df = pd.DataFrame(posts_data)
df.head()
```
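For the export step, a minimal sketch could look like this. The two records are made-up stand-ins for posts_data (same keys as the dicts built by extract_post_data), and the file names are arbitrary:

```python
import pandas as pd

# Hypothetical sample records with the same keys as extract_post_data's output
posts_data = [
    {"title": "Tendances beauté", "datetime": "2021-01-04", "abstract": "Abstract A",
     "content": "Body A", "url": "https://example.com/a"},
    {"title": "Post B", "datetime": "2021-01-05", "abstract": "Abstract B",
     "content": "Body B", "url": "https://example.com/b"},
]

df = pd.DataFrame(posts_data)

# "utf-8-sig" adds a BOM so Excel detects UTF-8 and shows accented French text correctly
df.to_csv("posts.csv", index=False, encoding="utf-8-sig")

# One JSON object per post; force_ascii=False keeps accents readable in the file
df.to_json("posts.json", orient="records", force_ascii=False)

# df.to_excel("posts.xlsx", index=False)  # needs an Excel engine such as openpyxl
```

`index=False` keeps pandas’ row numbers out of the CSV, so the file contains only the scraped fields.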

Thanks for reading and for staying till the end! If you’re interested in the video recording of this tutorial, here is the link:

Where to go from here?

You just learned how to build your first scraper, congratulations!

If you want to improve it, here are a few possible next steps:

- Parallelizing the script with multiprocessing or multithreading

- Scheduling the scraper to run periodically, to automate data collection

- Handling errors: scrapers are hard to maintain over time because the website’s source code may change

- Storing the scraped items in a database or an S3 bucket
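On the error-handling point: chaining find calls directly means a single missing element raises an AttributeError and aborts the whole run. One common approach, sketched here with a hypothetical helper named `safe_text` and made-up HTML reusing the class names from this post, is to return None for a missing field so the scraper can record it and carry on:

```python
from bs4 import BeautifulSoup


def safe_text(soup, name, class_):
    """Return the stripped text of the first matching tag, or None if it is missing."""
    tag = soup.find(name, class_=class_)
    return tag.get_text(strip=True) if tag is not None else None


# Hypothetical post page where the abstract has disappeared after a layout change
html = '<h1 class="article-title"> My post </h1><div class="article-text"><p>Hello</p></div>'
soup = BeautifulSoup(html, "html.parser")

post = {
    "title": safe_text(soup, "h1", "article-title"),
    "abstract": safe_text(soup, "h2", "article-intro"),
    "content": safe_text(soup, "div", "article-text"),
}
# Fields that came back empty are a hint that the site's HTML has changed
missing = [field for field, value in post.items() if value is None]

print(post)     # {'title': 'My post', 'abstract': None, 'content': 'Hello'}
print(missing)  # ['abstract']
```

Logging the `missing` list for each URL gives you an early warning when the site’s markup changes, instead of a crash halfway through the run.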

This may get you busy for a while! Happy data scraping!